(Based on Docker Swarm)

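Prepare the images. The logs being collected should ideally already be in JSON format; otherwise they have to be filtered and split with grok, which is considerably more work.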
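If a service does not log JSON yet, one JSON object per line is usually enough for the rest of the pipeline to parse it without grok. The following is only a minimal sketch: the helper name `log_json` and the field names `@timestamp`, `level` and `message` are assumptions chosen for illustration, and it escapes only backslashes and double quotes, not control characters.

```shell
# Hypothetical helper: print one JSON log line to stdout.
# Usage: log_json LEVEL message words...
log_json() {
  level=$1
  shift
  # Escape backslashes first, then double quotes, so the message stays valid JSON.
  msg=$(printf '%s' "$*" | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"@timestamp":"%s","level":"%s","message":"%s"}\n' \
    "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$level" "$msg"
}
```

A service wrapper could append the result to its log file, for example `log_json INFO "service started" >> /deploy/logs/md-mdm-deploy/app.log` (the file name `app.log` is also an assumption).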

docker pull docker.elastic.co/beats/filebeat:7.17.8

docker pull docker.elastic.co/logstash/logstash:7.17.8

docker pull docker.elastic.co/elasticsearch/elasticsearch:7.17.8

docker pull docker.elastic.co/kibana/kibana:7.17.8

Write the YAML file

version: '3.7'
services:
  filebeat:
    image: docker.elastic.co/beats/filebeat:7.17.8
    hostname: filebeat
    volumes:
      - /deploy/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml:ro
      - /deploy/filebeat/config:/usr/share/filebeat/config
      - /deploy/logs:/var/logs
  logstash:
    image: docker.elastic.co/logstash/logstash:7.17.8
    hostname: logstash
    volumes:
      - /deploy/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml
      - /deploy/logstash/pipelines.yml:/usr/share/logstash/config/pipelines.yml
      - /deploy/logstash/conf.d:/usr/share/logstash/pipeline
      - /etc/localtime:/etc/localtime:ro
    ports:
      - 5044:5044
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.17.8
    hostname: es01
    environment:
      - node.name=es01
      - cluster.name=es-docker-cluster        # cluster name
      - network.publish_host=es01
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"    # JVM heap size
      - "TZ=Asia/Shanghai"
    ulimits:                                  # allow unlimited memory locking so the heap is never swapped out
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /deploy/elasticsearch01/data:/usr/share/elasticsearch/data
      - /deploy/elasticsearch01/logs:/usr/share/elasticsearch/logs
      - /deploy/elasticsearch01/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /deploy/elasticsearch01/certs:/usr/share/elasticsearch/config/certs
      - /etc/localtime:/etc/localtime:ro
    ports:
      - 9201:9200
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.17.8
    hostname: es02
    environment:
      - node.name=es02
      - network.publish_host=es02
      - cluster.name=es-docker-cluster        # cluster name
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"    # JVM heap size
      - "TZ=Asia/Shanghai"
    ulimits:                                  # allow unlimited memory locking so the heap is never swapped out
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /deploy/elasticsearch02/data:/usr/share/elasticsearch/data
      - /deploy/elasticsearch02/logs:/usr/share/elasticsearch/logs
      - /deploy/elasticsearch02/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /deploy/elasticsearch02/certs:/usr/share/elasticsearch/config/certs
      - /etc/localtime:/etc/localtime:ro
    ports:
      - 9202:9200
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.17.8
    hostname: es03
    environment:
      - node.name=es03
      - network.publish_host=es03
      - cluster.name=es-docker-cluster        # cluster name
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"    # JVM heap size
      - "TZ=Asia/Shanghai"
    ulimits:                                  # allow unlimited memory locking so the heap is never swapped out
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /deploy/elasticsearch03/data:/usr/share/elasticsearch/data
      - /deploy/elasticsearch03/logs:/usr/share/elasticsearch/logs
      - /deploy/elasticsearch03/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /deploy/elasticsearch03/certs:/usr/share/elasticsearch/config/certs
      - /etc/localtime:/etc/localtime:ro
    ports:
      - 9203:9200
  kibana:
    depends_on:
      - es01
      - es02
      - es03
    image: docker.elastic.co/kibana/kibana:7.17.8
    hostname: kibana
    environment:
      ELASTICSEARCH_HOSTS: '["http://es01:9200","http://es02:9200","http://es03:9200"]'
    volumes:
      - /deploy/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
      - /etc/localtime:/etc/localtime:ro
    ports:
      - 5601:5601
networks:
  srm_net:
    ipam:
      driver: default

Create the mount directories and configuration files

# Create the mount directories

mkdir -p /deploy/filebeat/config

mkdir -p /deploy/logstash/conf.d

mkdir -p /deploy/elasticsearch01/{data,logs,certs}

mkdir -p /deploy/elasticsearch02/{data,logs,certs}

mkdir -p /deploy/elasticsearch03/{data,logs,certs}

mkdir -p /deploy/kibana

# Create the Filebeat configuration file
# cat /deploy/filebeat/filebeat.yml
filebeat.config.inputs:
  enabled: true
  path: /usr/share/filebeat/config/*.yml   # one configuration file per microservice log
  reload.enabled: true                     # reload the configuration automatically
  reload.period: 10s
output.logstash:
  hosts: ["logstash:5044"]

# Example input file under config/ for the md-mdm-deploy service
- type: log
  enabled: true
  paths:
    - /var/logs/md-mdm-deploy/*.log
  fields:
    servicename: md-mdm-deploy
  scan_frequency: 10s
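Because every microservice gets its own input file, the files can be generated rather than written by hand. The sketch below is one possible way to do it; the function name `make_filebeat_input` is hypothetical, and the two `json.*` options are included on the assumption that the service writes one JSON object per line (drop them for plain-text logs).

```shell
# Hypothetical helper: write one Filebeat input file per microservice.
# Usage: make_filebeat_input SERVICE [CONFIG_DIR]
make_filebeat_input() {
  svc=$1
  dir=${2:-/deploy/filebeat/config}
  mkdir -p "$dir"
  cat > "$dir/$svc.yml" <<EOF
- type: log
  enabled: true
  paths:
    - /var/logs/$svc/*.log
  fields:
    servicename: $svc
  scan_frequency: 10s
  # Assumption: each log line is a single JSON object.
  json.keys_under_root: true
  json.add_error_key: true
EOF
}
```

Running `make_filebeat_input md-mdm-deploy` writes `/deploy/filebeat/config/md-mdm-deploy.yml`; with `reload.enabled: true`, Filebeat should pick the file up on its next reload period.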

# Create the Logstash configuration files
# cat /deploy/logstash/logstash.yml
pipeline.ordered: auto
http.enabled: true
http.host: 127.0.0.1
http.port: 8080

# cat /deploy/logstash/pipelines.yml
- pipeline.id: main
  path.config: "/usr/share/logstash/pipeline/*.conf"   # one configuration file per microservice log

# Example configuration file under pipeline/
input {
  beats {
    port => 5044
  }
}
output {
  if [fields][servicename] == "md-mdm-deploy" {   # must match the servicename set in the Filebeat input file
    elasticsearch {
      hosts => ["es01","es02","es03"]
      index => "md-mdm-deploy-%{+YYYY-MM-dd}"
      codec => json {
        charset => "UTF-8"
      }
    }
  }
}
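Logstash concatenates every file matched by `path.config` into one pipeline, so if each per-service file repeated the `beats` input, the same port would be opened more than once. One way to avoid that, sketched here under assumptions, is a single shared input file plus one output-only file per service. The function names and the `00-`/`50-` file prefixes are hypothetical.

```shell
# Hypothetical helper: write the single shared Beats input.
make_logstash_input() {
  dir=${1:-/deploy/logstash/conf.d}
  mkdir -p "$dir"
  cat > "$dir/00-input.conf" <<'EOF'
input {
  beats {
    port => 5044
  }
}
EOF
}

# Hypothetical helper: write an output-only file for one microservice.
# Usage: make_logstash_output SERVICE [CONF_DIR]
make_logstash_output() {
  svc=$1
  dir=${2:-/deploy/logstash/conf.d}
  mkdir -p "$dir"
  cat > "$dir/50-$svc.conf" <<EOF
output {
  if [fields][servicename] == "$svc" {
    elasticsearch {
      hosts => ["es01", "es02", "es03"]
      index => "$svc-%{+YYYY-MM-dd}"
    }
  }
}
EOF
}
```

For example, `make_logstash_input && make_logstash_output md-mdm-deploy` produces the two files for the service used throughout this walkthrough.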

# Create the Elasticsearch configuration files
# /deploy/elasticsearch01/elasticsearch.yml; es02 and es03 use the same configuration, so it is not repeated here.
network.host: 0.0.0.0
cluster.name: "es-docker-cluster"
bootstrap.system_call_filter: false
node.master: true
node.data: true
discovery.zen.minimum_master_nodes: 2   # legacy Zen setting; deprecated and ignored in 7.x
cluster.initial_master_nodes: ["es01", "es02", "es03"]
discovery.seed_hosts: ["es01", "es02", "es03"]

# Create the Kibana configuration file
# cat /deploy/kibana/kibana.yml
server.name: kibana
server.host: '0.0.0.0'
elasticsearch.hosts: [ "http://es01:9200","http://es02:9200","http://es03:9200" ]

# Until the certificates have been generated, keep the X-Pack settings below commented out
xpack.monitoring.ui.container.elasticsearch.enabled: true
elasticsearch.username: "elastic"   # note: the elastic account is required here, otherwise sentinl cannot be used
elasticsearch.password: "elastic"   # must match the password generated in the password step

Generate the X-Pack certificates

# Write instances.yml so that the certificates cover the DNS names used inside the containers
instances:
  - name: es01
    dns:
      - es01
      - localhost
    ip:
      - 127.0.0.1
  - name: es02
    dns:
      - es02
      - localhost
    ip:
      - 127.0.0.1
  - name: es03
    dns:
      - es03
      - localhost
    ip:
      - 127.0.0.1
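The three entries differ only in the node name, so the file can also be generated from a list of nodes. A small sketch, assuming a hypothetical helper called `make_instances` that prints the file to stdout:

```shell
# Hypothetical helper: print an instances.yml for the given node names.
# Usage: make_instances es01 es02 es03 > instances.yml
make_instances() {
  echo "instances:"
  for node in "$@"; do
    cat <<EOF
  - name: $node
    dns:
      - $node
      - localhost
    ip:
      - 127.0.0.1
EOF
  done
}
```

Adding a fourth node later then only means adding one more name to the argument list and regenerating the certificates.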

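# Deploy the stack file. Note again: until the X-Pack certificates exist, keep the X-Pack settings commented out, otherwise the services cannot start.
docker stack deploy -c docker-stack-elk.yml qzing

# Swarm names task containers qzing_es01.1.<task id>, so look up the container ID first
# (run this on the node where the es01 task is scheduled)
ES01=$(docker ps -q -f name=qzing_es01 | head -n 1)

# Copy instances.yml into the container
docker cp instances.yml "$ES01":/usr/share/elasticsearch/config/certs

# Log in to one node of the ES cluster
docker exec -it "$ES01" /bin/bash

# Generate the certificates (inside the container, from /usr/share/elasticsearch), then exit the container
bin/elasticsearch-certutil cert --silent --pem --in config/certs/instances.yml --out bundle.zip

# Copy the certificates to the host
docker cp "$ES01":/usr/share/elasticsearch/bundle.zip .

# Unpack the archive and put the files in the matching directories (it contains ca, es01, es02, es03)
unzip bundle.zip
cp -a ca /deploy/elasticsearch01/certs
cp -a es01 /deploy/elasticsearch01/certs
cp -a ca /deploy/elasticsearch02/certs
cp -a es02 /deploy/elasticsearch02/certs
cp -a ca /deploy/elasticsearch03/certs
cp -a es03 /deploy/elasticsearch03/certs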
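The six copy commands follow one pattern: each node directory receives the shared `ca` folder plus its own folder. As a sketch, the same step can be written as a loop; the helper name `install_certs` and its arguments are hypothetical.

```shell
# Hypothetical helper: copy the CA and per-node certificates into each node's certs directory.
# Usage: install_certs UNPACKED_BUNDLE_DIR [BASE_DIR]
install_certs() {
  src=$1
  base=${2:-/deploy}
  for n in 01 02 03; do
    dest="$base/elasticsearch$n/certs"
    mkdir -p "$dest"
    # Every node needs the CA; only its own key and certificate are copied alongside it.
    cp -a "$src/ca" "$src/es$n" "$dest/"
  done
}
```

Called as `install_certs .` from the directory where `bundle.zip` was unpacked, it has the same effect as the individual commands.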

Add the X-Pack settings and generate the passwords

# Append the X-Pack settings on every ES node (es01 shown; use the es02 and es03 certificate paths on the other nodes)
cat >> /deploy/elasticsearch01/elasticsearch.yml << EOF
xpack.security.enabled: true
xpack.license.self_generated.type: basic
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.certificate_authorities: /usr/share/elasticsearch/config/certs/ca/ca.crt
xpack.security.transport.ssl.certificate: /usr/share/elasticsearch/config/certs/es01/es01.crt
xpack.security.transport.ssl.key: /usr/share/elasticsearch/config/certs/es01/es01.key
xpack.monitoring.enabled: true
xpack.monitoring.collection.enabled: true
xpack.monitoring.collection.interval: 30s
EOF
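Only the certificate and key paths change from node to node, so the block can be appended to all three files with one parameterised function. This is a sketch that covers just the security settings; the function name `append_xpack` is hypothetical, and the monitoring settings would be added the same way.

```shell
# Hypothetical helper: append the transport TLS settings for one node to its elasticsearch.yml.
# Usage: append_xpack NODE_NAME CONFIG_FILE
append_xpack() {
  node=$1
  file=$2
  certs=/usr/share/elasticsearch/config/certs
  # ">>" appends, so the existing settings in the file are kept.
  cat >> "$file" <<EOF
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.certificate_authorities: $certs/ca/ca.crt
xpack.security.transport.ssl.certificate: $certs/$node/$node.crt
xpack.security.transport.ssl.key: $certs/$node/$node.key
EOF
}
```

A loop such as `for n in 01 02 03; do append_xpack "es$n" "/deploy/elasticsearch$n/elasticsearch.yml"; done` would then update all three nodes. It should be run only once per file, since each run appends the block again.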

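# Restart the ES cluster (in the qzing stack the services are named qzing_es01, qzing_es02, qzing_es03)
for s in es01 es02 es03; do docker service update --force "qzing_$s"; done

# Enter a container and generate the passwords; use one of the two modes
bin/elasticsearch-setup-passwords auto          # optional alternative: generate random passwords
bin/elasticsearch-setup-passwords interactive   # set custom passwords interactively

# After the passwords have been generated, leave the container
exit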

Configure Logstash and Kibana with the password for connecting to ES

# Add the ES credentials to every configuration file under the Logstash conf.d directory
output{
  if [fields][servicename] == "md-mdm-deploy" {
    elasticsearch {
      user => "elastic"
      password => "elastic"   # must match the password generated in the previous step
      ...

# Add the ES credentials to kibana.yml
xpack.monitoring.ui.container.elasticsearch.enabled: true
elasticsearch.username: "elastic"   # note: the elastic account is required here, otherwise sentinl cannot be used
elasticsearch.password: "elastic"   # must match the password generated in the previous step

# Redeploy the ELK stack
docker stack deploy -c docker-stack-elk.yml qzing

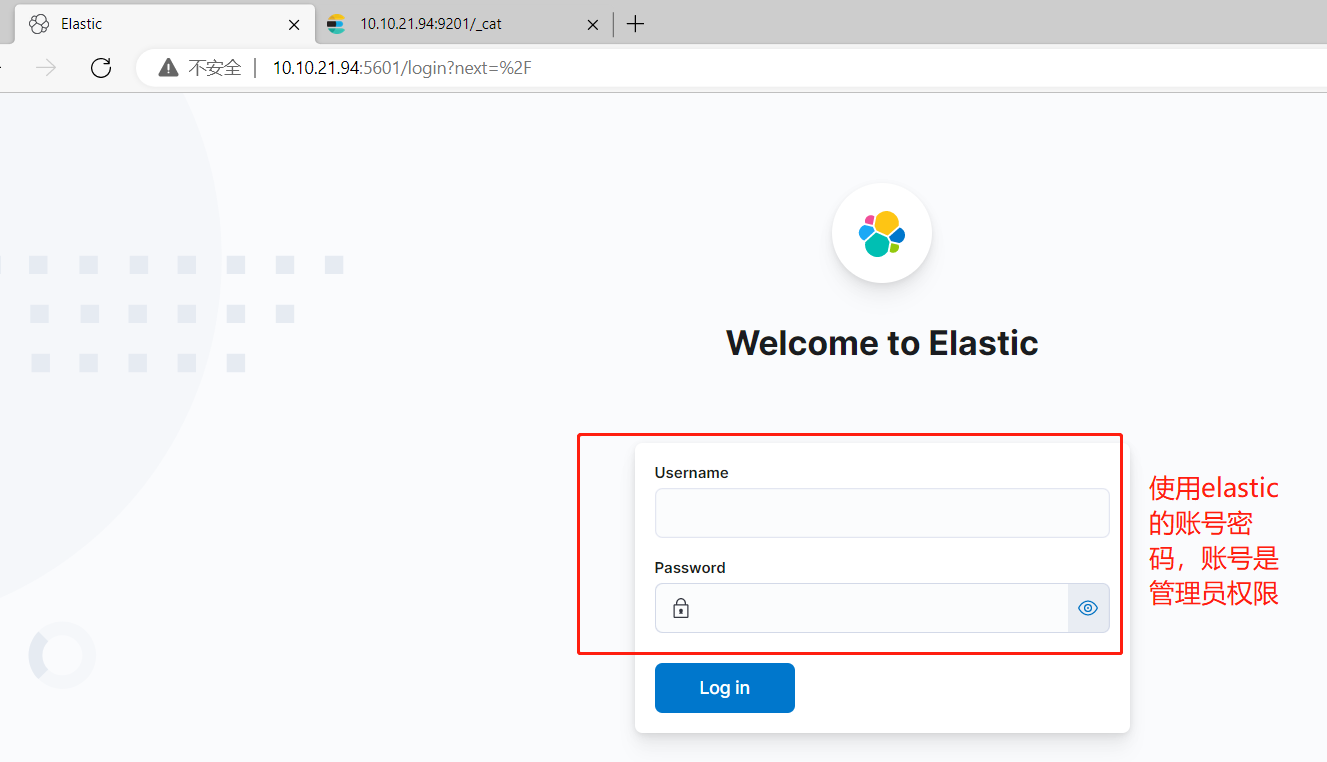

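Log in to the ES cluster and check whether the indices were created

# Check whether the microservice indices exist; if they do not, inspect the service logs to find the cause
curl -sk 'http://elastic:elastic@10.10.21.94:9201/_cat/indices'

# Check the cluster health
curl -sk 'http://elastic:elastic@10.10.21.94:9201/_cat/health?v'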
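Right after a redeploy the cluster may need some time before it answers, so a scripted check can poll the health endpoint instead of querying it once. The sketch below is an assumption about how one might do that: the function names, the retry count and the 5-second pause are illustrative, and it treats both `green` and `yellow` as usable.

```shell
# Hypothetical helper: succeed for a status that can serve requests.
status_ok() {
  case "$1" in
    green|yellow) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: poll _cat/health until the cluster is usable.
# Usage: wait_for_cluster BASE_URL [TRIES]
wait_for_cluster() {
  url=$1
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # h=status limits the _cat/health output to the status column.
    status=$(curl -s "$url/_cat/health?h=status" | tr -d '[:space:]')
    if status_ok "$status"; then
      echo "$status"
      return 0
    fi
    i=$((i + 1))
    sleep 5
  done
  return 1
}
```

With the address used above this would be called as `wait_for_cluster 'http://elastic:elastic@10.10.21.94:9201'`, followed by the `_cat/indices` query once it returns successfully.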

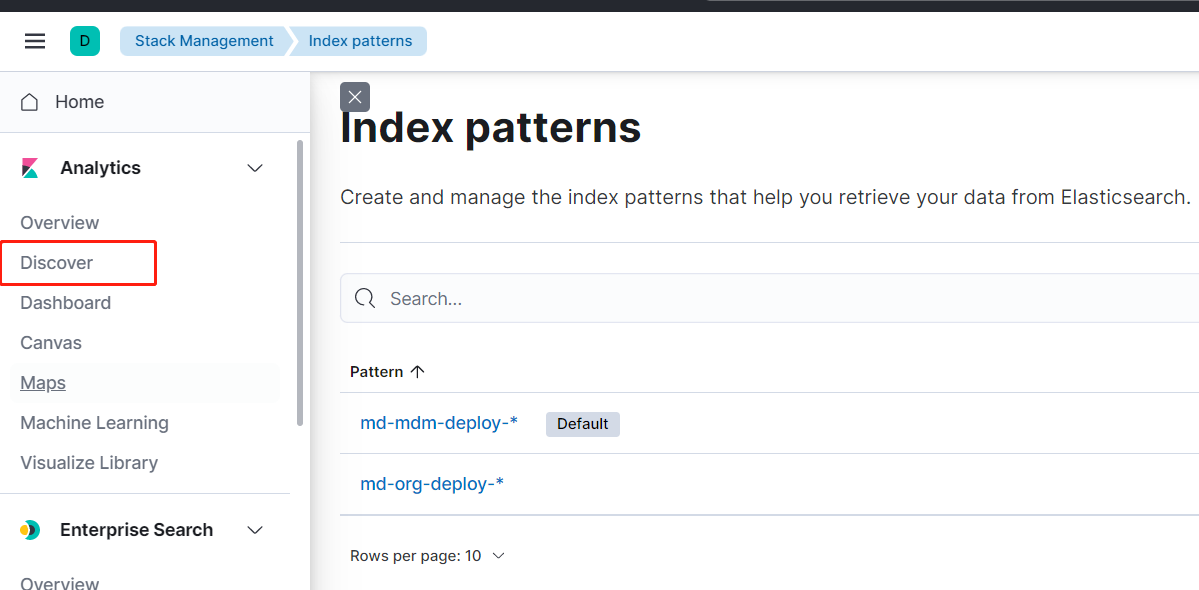

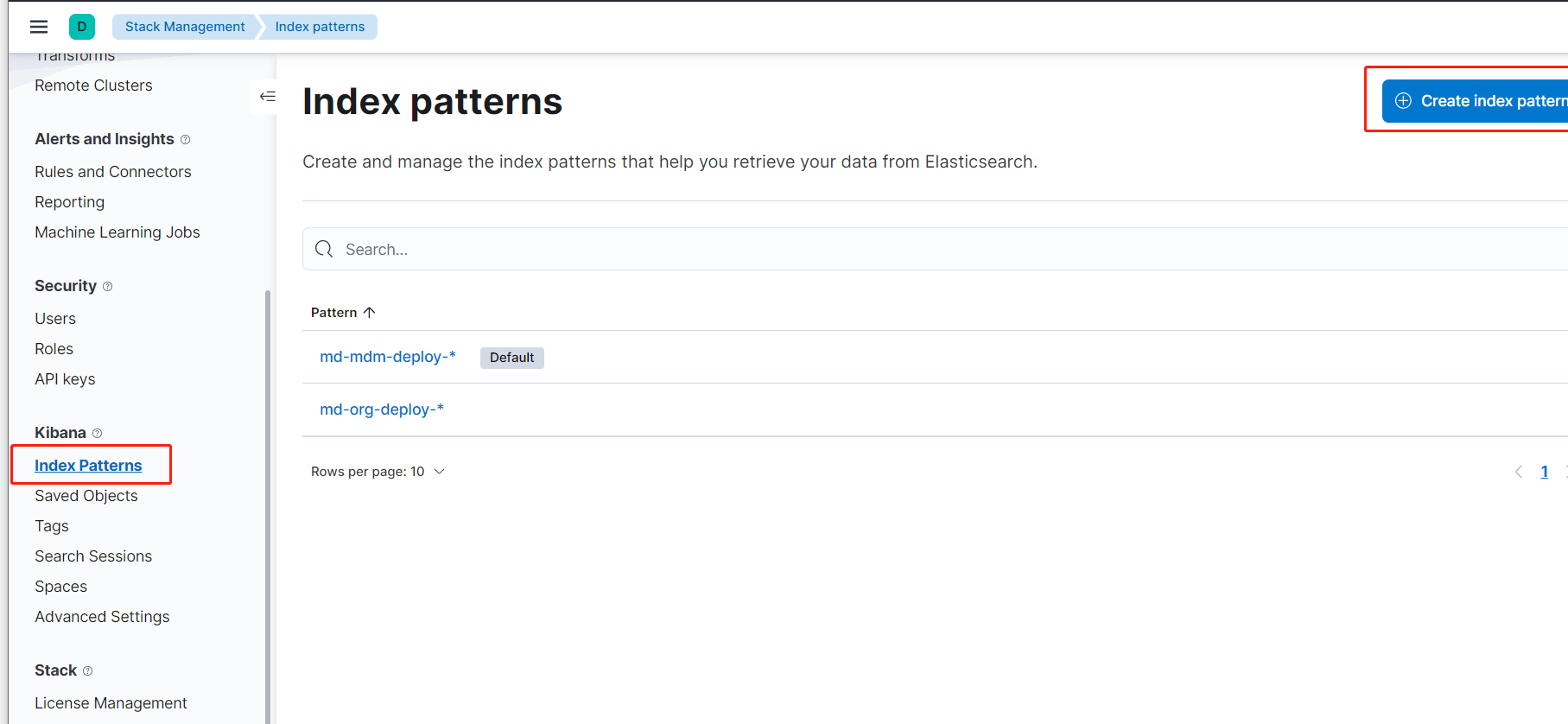

The ES cluster now shows the microservice indices. Log in to Kibana and create the index pattern.

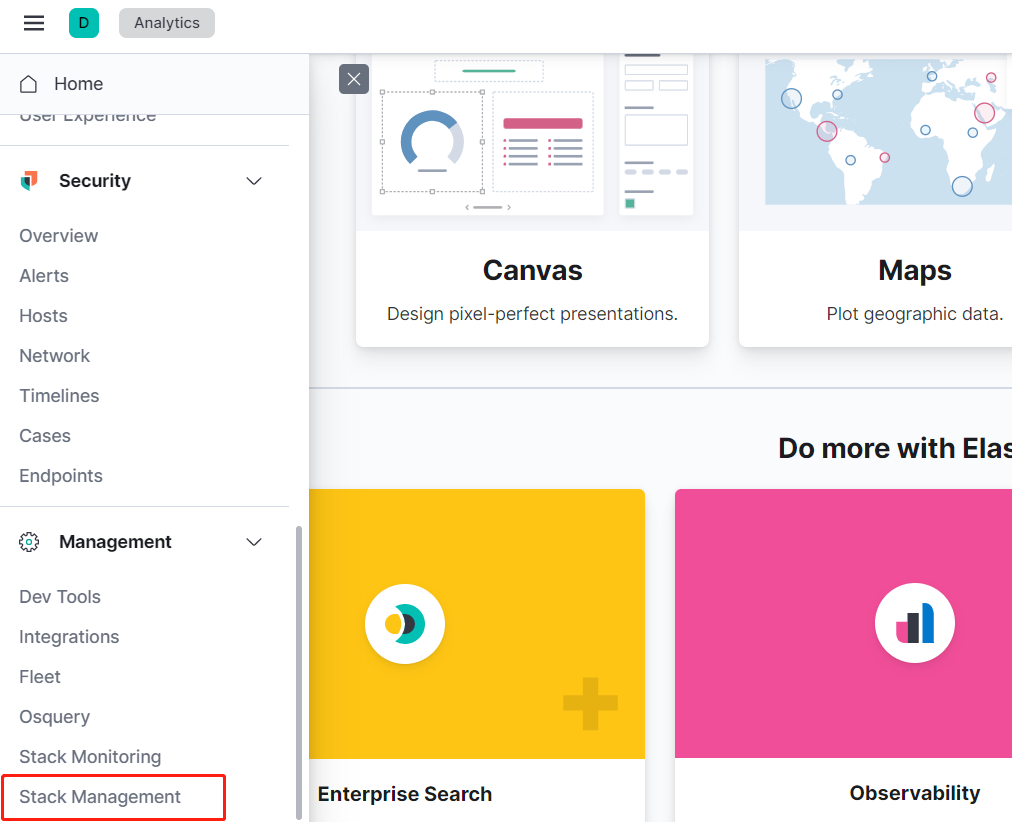

After logging in, open [Stack Management] in the management section.

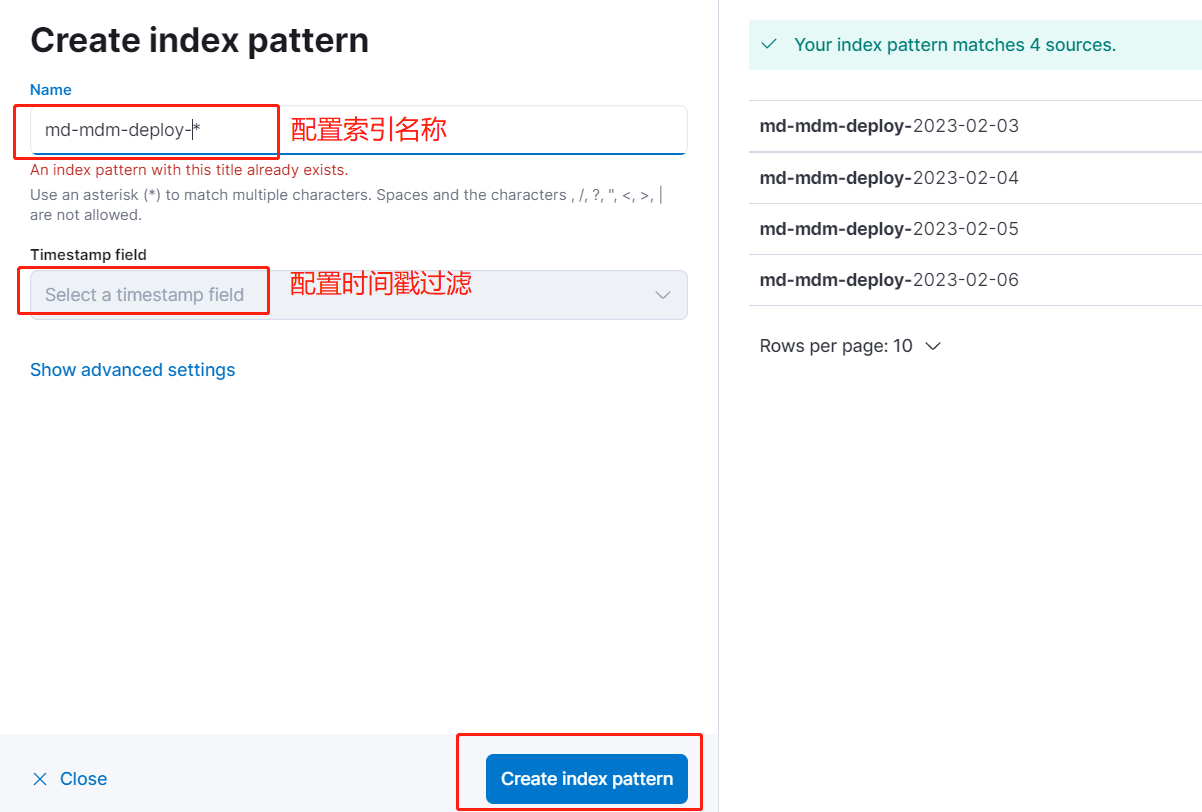

Under Kibana, select Index Patterns > Create index pattern.

Create the index pattern

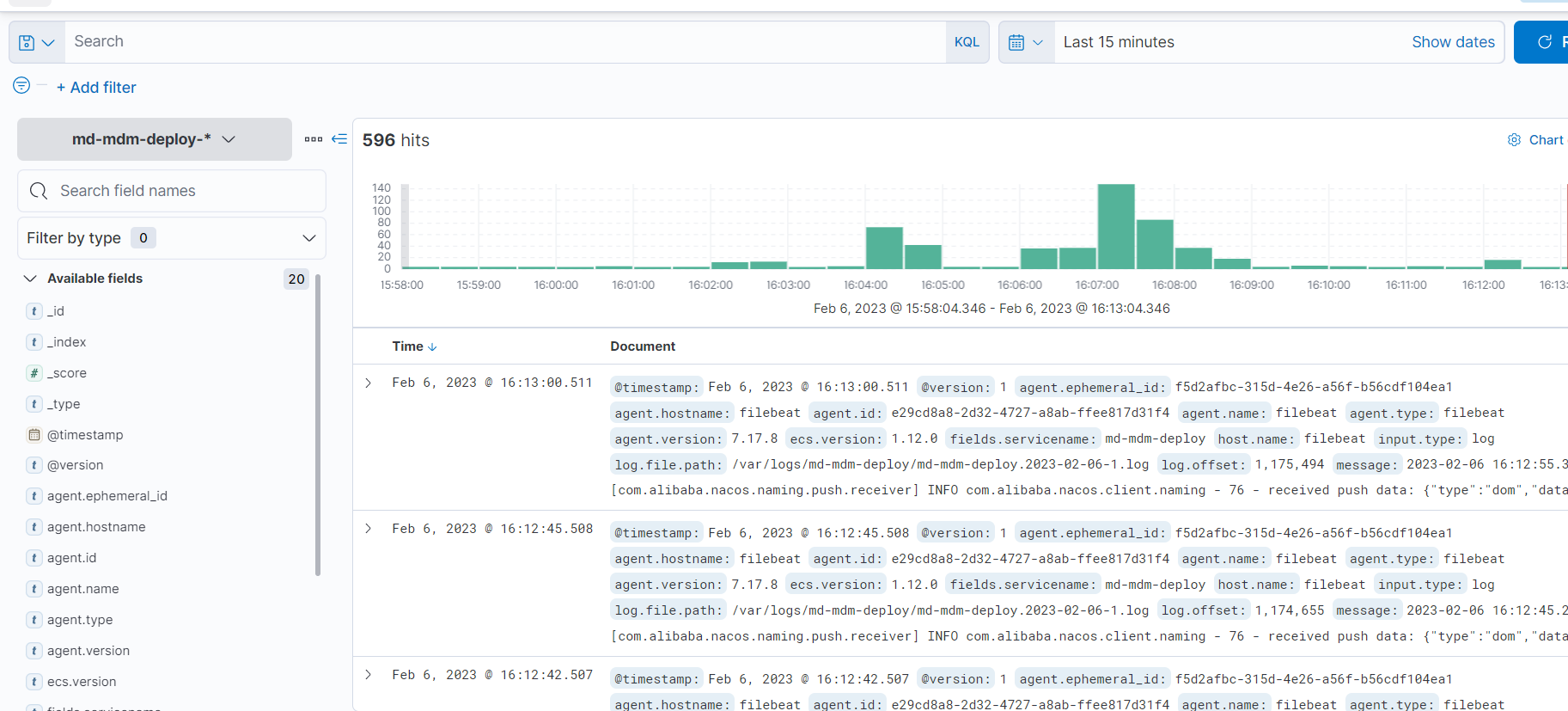

Open Discover to see the logs of the corresponding microservice.