Step 1: Download the kube-prometheus manifests

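#Check out the release-0.10 branch into the directory name used in the following steps

git clone -b release-0.10 https://github.com/prometheus-operator/kube-prometheus.git kube-prometheus-release-0.10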

Step 2: Modify the kube-prometheus manifests

#Enter the directory

cd kube-prometheus-release-0.10/manifests

#List the images that need to be downloaded

find ./ -type f |xargs grep 'image: '|sort|uniq|awk '{print $3}'|grep ^[a-zA-Z]|grep -Evw 'error|kubeRbacProxy'|sort -rn|uniq

#Output

quay.io/prometheus/prometheus:v2.32.1

quay.io/prometheus-operator/prometheus-operator:v0.53.1

quay.io/prometheus/node-exporter:v1.3.1

quay.io/prometheus/blackbox-exporter:v0.19.0

quay.io/prometheus/alertmanager:v0.23.0

quay.io/brancz/kube-rbac-proxy:v0.11.0

k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1

k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.3.0

jimmidyson/configmap-reload:v0.5.0

grafana/grafana:8.3.3
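The pipeline above can also be written as a small function. It reads image names straight from the `image:` fields and deduplicates them. This is a sketch that assumes GNU grep with `--include`, and `list_images` is a hypothetical helper name. A grep for `image: ` cannot see images passed as command-line flags. It may therefore be worth checking prometheus-operator-deployment.yaml for a `--prometheus-config-reloader=` argument, because that image would need to be mirrored as well.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every distinct image referenced in an "image:"
# field of the *.yaml files under a directory (default: current directory).
list_images() {
  grep -rhoE --include='*.yaml' 'image: +[^ ]+' "${1:-.}" \
    | awk '{print $2}' \
    | tr -d "\"'" \
    | grep -E '^[a-zA-Z]' \
    | sort -u
}

# Usage, from kube-prometheus-release-0.10/manifests:
#   list_images . > prometheusimage.txt
```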

#I pulled and packaged the images on a server in Hong Kong

#First put the image names into a text file named prometheusimage.txt, then pull them in batch with a script

while read -r i; do
  echo "Pulling image $i"
  docker pull "$i"
done < prometheusimage.txt

#After the pull finishes, retag the images so they can be pushed to the private registry later

docker image tag quay.io/prometheus/prometheus:v2.32.1 zxz.harbor.com/prometheus/prometheus:v2.32.1

docker image tag quay.io/prometheus-operator/prometheus-operator:v0.53.1 zxz.harbor.com/prometheus/prometheus-operator:v0.53.1

docker image tag quay.io/prometheus/node-exporter:v1.3.1 zxz.harbor.com/prometheus/node-exporter:v1.3.1

docker image tag quay.io/prometheus/blackbox-exporter:v0.19.0 zxz.harbor.com/prometheus/blackbox-exporter:v0.19.0

docker image tag quay.io/prometheus/alertmanager:v0.23.0 zxz.harbor.com/prometheus/alertmanager:v0.23.0

docker image tag quay.io/brancz/kube-rbac-proxy:v0.11.0 zxz.harbor.com/prometheus/kube-rbac-proxy:v0.11.0

docker image tag k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1 zxz.harbor.com/prometheus/prometheus-adapter:v0.9.1

docker image tag k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.3.0 zxz.harbor.com/prometheus/kube-state-metrics:v2.3.0

docker image tag jimmidyson/configmap-reload:v0.5.0 zxz.harbor.com/prometheus/configmap-reload:v0.5.0

docker image tag grafana/grafana:8.3.3 zxz.harbor.com/prometheus/grafana:8.3.3
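The ten tag commands above follow one rule. Each keeps the last path component of the upstream name and prefixes it with `zxz.harbor.com/prometheus`. Below is a minimal sketch of that rule, assuming the image list in prometheusimage.txt from the previous step. `harbor_name` and `retag_all` are hypothetical helper names. By default the function only prints the commands; set `DRY_RUN=0` to run them.

```bash
#!/usr/bin/env bash
# Sketch of the retagging rule used above (assumption: every image lands in the
# "prometheus" project of zxz.harbor.com under its last path component).
REGISTRY=${REGISTRY:-zxz.harbor.com/prometheus}

# harbor_name quay.io/prometheus/prometheus:v2.32.1
#   -> zxz.harbor.com/prometheus/prometheus:v2.32.1
harbor_name() {
  printf '%s/%s\n' "$REGISTRY" "${1##*/}"
}

# retag_all <list-file>
# Prints the "docker image tag" command for each image in the list (DRY_RUN=1,
# the default) or runs it (DRY_RUN=0). Blank lines are skipped.
retag_all() {
  local img target
  while read -r img || [ -n "$img" ]; do
    [ -n "$img" ] || continue
    target=$(harbor_name "$img")
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo docker image tag "$img" "$target"
    else
      docker image tag "$img" "$target"
    fi
  done < "$1"
}

# Usage:
#   retag_all prometheusimage.txt             # review the commands
#   DRY_RUN=0 retag_all prometheusimage.txt   # actually tag the images
```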

#Save the images into a tarball, then download it and upload it to the master node used for deployment

docker save -o prometheusimage.tar zxz.harbor.com/prometheus/prometheus:v2.32.1 zxz.harbor.com/prometheus/prometheus-operator:v0.53.1 zxz.harbor.com/prometheus/node-exporter:v1.3.1 zxz.harbor.com/prometheus/blackbox-exporter:v0.19.0 zxz.harbor.com/prometheus/alertmanager:v0.23.0 zxz.harbor.com/prometheus/kube-rbac-proxy:v0.11.0 zxz.harbor.com/prometheus/prometheus-adapter:v0.9.1 zxz.harbor.com/prometheus/kube-state-metrics:v2.3.0 zxz.harbor.com/prometheus/configmap-reload:v0.5.0 zxz.harbor.com/prometheus/grafana:8.3.3

#The upload step is omitted. On the node where Prometheus is deployed, load the tarball

docker load -i prometheusimage.tar

#Push the images to the private registry

docker push <image name>

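#Replace the default image addresses in the manifests with the private registry addresses

sed -i 's#quay.io/prometheus/prometheus:v2.32.1#zxz.harbor.com/prometheus/prometheus:v2.32.1#g' *.yaml setup/*.yaml

#Replace the other images the same way; the commands are not repeated here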
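Rather than writing one sed command per image, you can drive the same substitution from prometheusimage.txt. This sketch assumes GNU sed (`-i` with no backup suffix) and the same naming rule as the tag commands. `rewrite_images` is a hypothetical helper name. It edits the files in place, so it is prudent to run it first on a copy or under version control.

```bash
#!/usr/bin/env bash
# Sketch: rewrite every image from a list file to its Harbor address in the
# given YAML files. Assumes GNU sed (-i without a backup suffix) and the same
# naming rule as the tag commands: zxz.harbor.com/prometheus/<name>:<tag>.
REGISTRY=${REGISTRY:-zxz.harbor.com/prometheus}

# rewrite_images <list-file> <yaml-file>...
rewrite_images() {
  local list=$1 img
  shift
  while read -r img || [ -n "$img" ]; do
    [ -n "$img" ] || continue
    # '#' is the sed delimiter because image names contain '/'.
    # Dots in the name act as regex wildcards, which still match the literal
    # dots, so exact image names are replaced as expected.
    sed -i "s#${img}#${REGISTRY}/${img##*/}#g" "$@"
  done < "$list"
}

# Usage, inside kube-prometheus-release-0.10/manifests:
#   rewrite_images prometheusimage.txt *.yaml setup/*.yaml
```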

#Change the nodeSelector values to pin the pods to specific nodes

sed -i 's#kubernetes.io/os: linux#kubernetes.io/os: grafana#g' grafana-deployment.yaml

sed -i 's#kubernetes.io/os: linux#kubernetes.io/os: prometheus#g' prometheus-prometheus.yaml

sed -i 's#kubernetes.io/os: linux#business: base#g' setup/*deployment.yaml

#I use an external Alertmanager, so I skipped this; if you need it, run the following

sed -i 's#kubernetes.io/os: linux#kubernetes.io/os: alertmanager#g' alertmanager-alertmanager.yaml

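#Label the nodes to match the selectors above (every node already has kubernetes.io/os=linux, so --overwrite is required)

kubectl label node <prometheus node> kubernetes.io/os=prometheus --overwrite

kubectl label node <grafana node> kubernetes.io/os=grafana --overwrite

kubectl label node <alertmanager node> kubernetes.io/os=alertmanager --overwrite

kubectl label node <base services node> business=base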

#Create the namespace

kubectl apply -f setup/namespace.yaml

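#Create a private registry secret in the namespace so that images can be pulled (the pods, or their ServiceAccounts, must reference it through imagePullSecrets)

kubectl create secret docker-registry secret-harbor -n monitoring --docker-server=https://zxz.harbor.com --docker-username=zxz --docker-password=123456 --docker-email=zxz.xxxx.com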

Step 3: Persist Prometheus data with a StorageClass

#Use NFS for persistent storage

#Install NFS

yum install rpcbind nfs-utils -y

#Start NFS

systemctl start nfs-server.service

systemctl enable nfs-server.service

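#Create the shared directory

mkdir /share-nfs

#Edit the exports file

vim /etc/exports

/share-nfs 192.168.100.0/24(rw,sync,no_wdelay,no_root_squash,no_all_squash)

#Reload the export table so the running NFS server picks up the change

exportfs -arv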

#Install the client on every node that will mount the share

yum install nfs-utils -y

#Check that the export is visible

showmount -e <NFS server IP address>

Export list for 192.168.100.120:

/share-nfs 192.168.100.0/24

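#Configure dynamic NFS provisioning

#Download the official example manifests and modify them. They are available at

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/tree/master/deploy

#Download rbac.yaml, deployment.yaml and class.yaml

#Edit deployment.yaml (vim deployment.yaml)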

...
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.100.120 #change to your own NFS server
            - name: NFS_PATH
              value: /share-nfs #change to the shared directory on your NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.100.120 #change to your own NFS server
            path: /share-nfs #change to the shared directory on your NFS server

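#Change the namespace to kube-system

sed -i 's#namespace: default#namespace: kube-system#g' *.yaml

#Deploy (kubectl apply takes one file per -f flag, so the files are listed explicitly)

kubectl apply -f rbac.yaml -f deployment.yaml -f class.yaml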

#Create a test PVC to check whether a PV is provisioned automatically

vim test-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 27G
  storageClassName: nfs-client

#Apply it and check the PVC

kubectl apply -f test-pvc.yaml

kubectl get pvc test-pvc

#Output

NAME       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
test-pvc   Bound    pvc-f6d3ecae-1531-44a1-b4c8-0b38398592cf   27G        RWO            nfs-client     6s
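The test PVC above requests `27G`, while the Prometheus volumeClaimTemplate in the next step requests `27Gi`. In Kubernetes quantities, `G` is decimal (10^9 bytes) and `Gi` is binary (2^30 bytes), so the two sizes differ. The sketch below makes the difference concrete. `to_bytes` is a hypothetical helper, and it only understands whole numbers with the suffixes listed in it.

```bash
#!/usr/bin/env bash
# Sketch: convert a whole-number Kubernetes quantity (e.g. 27G, 27Gi) to bytes.
# Decimal suffixes k M G T are powers of 1000; binary Ki Mi Gi Ti are powers of 1024.
# Fractions ("1.5Gi") and exponent forms ("27e9") are deliberately not handled.
to_bytes() {
  local q=$1 n unit
  n=${q%%[!0-9]*}          # leading digits
  unit=${q#"$n"}           # the suffix after the digits
  if [ -z "$n" ]; then
    echo "not a quantity: $q" >&2
    return 1
  fi
  # drop leading zeros so the shell does not read e.g. 027 as octal
  while [ "${#n}" -gt 1 ] && [ "${n#0}" != "$n" ]; do n=${n#0}; done
  case $unit in
    "") echo "$n" ;;
    k)  echo $(( n * 1000 )) ;;
    M)  echo $(( n * 1000 * 1000 )) ;;
    G)  echo $(( n * 1000 * 1000 * 1000 )) ;;
    T)  echo $(( n * 1000 * 1000 * 1000 * 1000 )) ;;
    Ki) echo $(( n * 1024 )) ;;
    Mi) echo $(( n * 1024 * 1024 )) ;;
    Gi) echo $(( n * 1024 * 1024 * 1024 )) ;;
    Ti) echo $(( n * 1024 * 1024 * 1024 * 1024 )) ;;
    *)  echo "unsupported suffix: $unit" >&2; return 1 ;;
  esac
}
```

For example, `to_bytes 27G` prints 27000000000 and `to_bytes 27Gi` prints 28991029248. That is a difference of 1991029248 bytes, about 1.99 GB.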

#Configure persistent storage in the Prometheus manifest (the storage field goes under spec of the Prometheus resource)

vim prometheus-prometheus.yaml

...
  storage:
    volumeClaimTemplate:
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "nfs-client"
        resources:
          requests:
            storage: 27Gi

Step 4: Persist Grafana data

#Use MySQL to persist Grafana data

vim grafana-config.yaml

apiVersion: v1
kind: Secret
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.3.3
  name: grafana-config
  namespace: monitoring
stringData:
  grafana.ini: |
    [date_formats]
    # time zone
    default_timezone = Asia/Shanghai
    [security]
    # default admin account and password
    admin_user = admin
    admin_password = admin
    [server]
    protocol = http
    http_addr = 0.0.0.0
    http_port = 3000
    # keep the domain in sync with the ingress host
    domain = zxz.grafana-zhangxz.com
    enforce_domain = true
    # browsers reach Grafana through the ingress on port 80, not on 3000
    root_url = http://zxz.grafana-zhangxz.com/
    router_logging = true
    enable_gzip = true
    [paths]
    data = /var/lib/grafana
    logs = /var/log/grafana
    plugins = /var/lib/grafana/plugins
    [auth.basic]
    enabled = true
    # persist Grafana data in MySQL
    [database]
    # mysql, postgres or sqlite3 (default: sqlite3)
    type = mysql
    # only needed for mysql and postgres (default: 127.0.0.1:3306)
    host = 192.168.100.120:3306
    # Grafana database name (default: grafana)
    name = grafana
    # database user
    user = grafana
    # database password
    password = 123456
type: Opaque

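#Log in to MySQL and create the grafana database and account (MySQL 8.0 no longer accepts IDENTIFIED BY inside GRANT, so the user is created first; this form also works on 5.7)

create database grafana;

CREATE USER 'grafana'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON grafana.* TO 'grafana'@'%';

flush privileges;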

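Step 5: Install the MetalLB load balancer

#Requirements. MetalLB needs the following to work properly:

- A Kubernetes cluster running Kubernetes 1.13.0 or later that does not already have network load-balancing functionality.
- A cluster network configuration that can coexist with MetalLB.
- Some IPv4 addresses for MetalLB to hand out.
- In BGP mode, one or more routers capable of speaking the Border Gateway Protocol (BGP).
- In L2 mode, traffic on port 7946 (TCP and UDP; another port can be configured) must be allowed between nodes, as required by memberlist.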

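#Download the MetalLB manifests (check out v0.11.0 into the directory name used below)

git clone -b v0.11.0 https://github.com/metallb/metallb.git metallb-0.11

#Enter the directory

cd metallb-0.11/manifests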

#Check the required images

cat metallb.yaml | grep image

image: quay.io/metallb/speaker:v0.11.0

image: quay.io/metallb/controller:v0.11.0

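#After pulling the images, retag them for the private Harbor registry and push them there; the steps are the same as above

#Deploy MetalLB. Note: if kube-proxy runs in IPVS mode, strict ARP must be enabled (strictARP: true in the kube-proxy configuration) for MetalLB to work

#There is no cross-subnet requirement here, so layer 2 mode is used. The addresses must be unused in the nodes' subnet, so ask your network administrator to reserve them

vim example-layer2-config.yaml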

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: my-ip-space
      protocol: layer2
      addresses:
      - 192.168.100.250/32 #a single address, hence the /32 mask; for a pool, use a wider CIDR or a start-end range
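A `/32` reserves exactly one address. You can count the addresses in a wider pool before asking for the reservation, as shown below. This is an IPv4-only sketch, and `pool_size` and `ip_to_int` are hypothetical helper names. Besides CIDR notation, MetalLB address pools also accept a start-end range such as `192.168.100.240-192.168.100.250`, and the function handles that form too. Note that the CIDR count includes the network and broadcast addresses.

```bash
#!/usr/bin/env bash
# Sketch: count the IPv4 addresses a MetalLB "addresses" entry covers.
# Accepts the CIDR form (a.b.c.d/len) and the range form (a.b.c.d-e.f.g.h).

# ip_to_int 192.168.100.250 -> the address as a 32-bit integer
ip_to_int() {
  local a b c d rest
  a=${1%%.*}; rest=${1#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

pool_size() {
  case $1 in
    */*) echo $(( 1 << (32 - ${1#*/}) )) ;;                              # 2^(32-len)
    *-*) echo $(( $(ip_to_int "${1#*-}") - $(ip_to_int "${1%-*}") + 1 )) ;; # end-start+1
    *)   echo "unrecognized entry: $1" >&2; return 1 ;;
  esac
}
```

For example, `pool_size 192.168.100.250/32` prints 1 and `pool_size 192.168.100.240-192.168.100.250` prints 11.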

#Start the deployment

#Create the namespace

kubectl apply -f namespace.yaml

#Create the ConfigMap

kubectl apply -f example-layer2-config.yaml

#Install the speaker and controller

kubectl apply -f metallb.yaml

#Check the result

kubectl get cm,pod -n metallb-system

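Step 6: Install kube-prometheus

#Enter the directory

cd kube-prometheus-release-0.10/

#Create the resources in setup/ first (the monitoring namespace already exists from step 2, so the AlreadyExists error for it can be ignored)

kubectl create -f manifests/setup/

#Wait until the ServiceMonitor CRD is served before creating the remaining resources

until kubectl get servicemonitors --all-namespaces; do date; sleep 1; echo ""; done

kubectl create -f manifests/

#Check the result

kubectl get svc,pod,cm,ep -n monitoring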

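Step 7: Configure ingress for external access to Prometheus, Grafana and Alertmanager

#Install ingress-nginx-controller and reach Prometheus and Grafana through ingress

#Download the YAML file. It is hosted outside China and may be slow or unreachable, so a proxy may be needed

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/cloud/deploy.yaml

#List the images and pull them in advance; the steps are not repeated here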

grep "image: " deploy.yaml

#Output

image: k8s.gcr.io/ingress-nginx/controller:v1.1.1

image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1

image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1

#Apply the YAML file

kubectl apply -f deploy.yaml

#Check the deployment

kubectl get po -n ingress-nginx

#Output

NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-scczn        0/1     Completed   0          17m
ingress-nginx-admission-patch-rp67g         0/1     Completed   1          17m
ingress-nginx-controller-776889d8cb-sbldc   1/1     Running     0          15m

#Configure prometheus-ingress: save the following as prometheus.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: zxz.prometheus-zhangxz.com #domain name
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
status:
  loadBalancer: {}

kubectl apply -f prometheus.yaml

#Configure grafana-ingress: save the following as grafana.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: zxz.grafana-zhangxz.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: grafana
            port:
              number: 3000
status:
  loadBalancer: {}

kubectl apply -f grafana.yaml

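#Configure alertmanager-ingress: save the following to a file (alert.yaml is used here as an example name). The name must differ from grafana-ingress, otherwise it would replace the Grafana ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: alert-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: zxz.alert-zhangxz.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: alertmanager-main
            port:
              number: 9093
status:
  loadBalancer: {}

kubectl apply -f alert.yaml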

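#Check the result. Because a network load balancer is configured, any machine that can reach the IP can access the services once its hosts file is set up

kubectl get ingress -n monitoring

#Output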

NAME                 CLASS    HOSTS                        ADDRESS           PORTS   AGE
grafana-ingress      <none>   zxz.grafana-zhangxz.com      192.168.100.250   80      15d
prometheus-ingress   <none>   zxz.prometheus-zhangxz.com   192.168.100.250   80      19d
alert-ingress        <none>   zxz.alert-zhangxz.com        192.168.100.250   80      79d

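#To access the services, map the exposed IP to the ingress domain names in the hosts file of your own computer (/etc/hosts on Linux and macOS, C:\Windows\System32\drivers\etc\hosts on Windows)

vim /etc/hosts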

192.168.100.250 zxz.grafana-zhangxz.com

192.168.100.250 zxz.prometheus-zhangxz.com

192.168.100.250 zxz.alert-zhangxz.com
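The three entries above can be added idempotently, so re-running the step does not duplicate lines. This is a sketch, and `add_hosts` is a hypothetical helper name. It takes the hosts file as a parameter, so you can try it on a scratch copy before pointing it at /etc/hosts. Writing to /etc/hosts requires root.

```bash
#!/usr/bin/env bash
# Sketch: append "IP hostname" entries to a hosts file unless the exact same
# line is already there, so the step can be re-run without duplicates.
# Only an exact "IP<space>hostname" line is detected; tab-separated or
# multi-name lines for the same host are not recognized.
add_hosts() {
  local file=$1 ip=$2 host
  shift 2
  for host in "$@"; do
    grep -qxF "$ip $host" "$file" 2>/dev/null || echo "$ip $host" >> "$file"
  done
}

# Usage (as root):
#   add_hosts /etc/hosts 192.168.100.250 \
#     zxz.grafana-zhangxz.com zxz.prometheus-zhangxz.com zxz.alert-zhangxz.com
```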

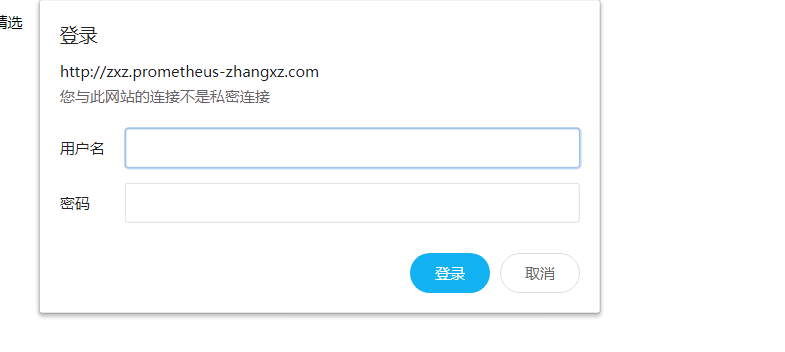

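Step 8: Configure basic authentication for Prometheus and Alertmanager so that the applications exposed through ingress require a login

#Install htpasswd (provided by httpd-tools) and create the account and password

yum install httpd-tools -y

$ htpasswd -c auth zhangxz

New password:

Re-type new password:

Adding password for user zhangxz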

#Create the secret (ingress-nginx expects the key to be named auth, which --from-file=auth provides)

kubectl create secret generic basic-auth --from-file=auth -n monitoring

#Add the following annotations to the Prometheus ingress manifest ingress-prometheus.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
    # type of authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    # name of the secret that contains the user/password definitions
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - zhangxz'
spec:
  rules:
  - host: zxz.prometheus-zhangxz.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
status:
  loadBalancer: {}

#Apply the YAML file

kubectl apply -f ingress-prometheus.yaml

#Check the result

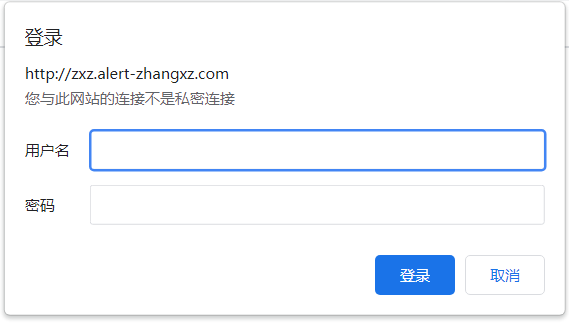

#Add the following annotations to the Alertmanager ingress manifest ingress-alertmanager.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: alert-ingress
  namespace: monitoring
  annotations:
    kubernetes.io/ingress.class: nginx
    # type of authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    # name of the secret that contains the user/password definitions
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - zhangxz'
spec:
  rules:
  - host: zxz.alert-zhangxz.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: alertmanager-main
            port:
              number: 9093

#Apply the YAML file

kubectl apply -f ingress-alertmanager.yaml

#Check the result

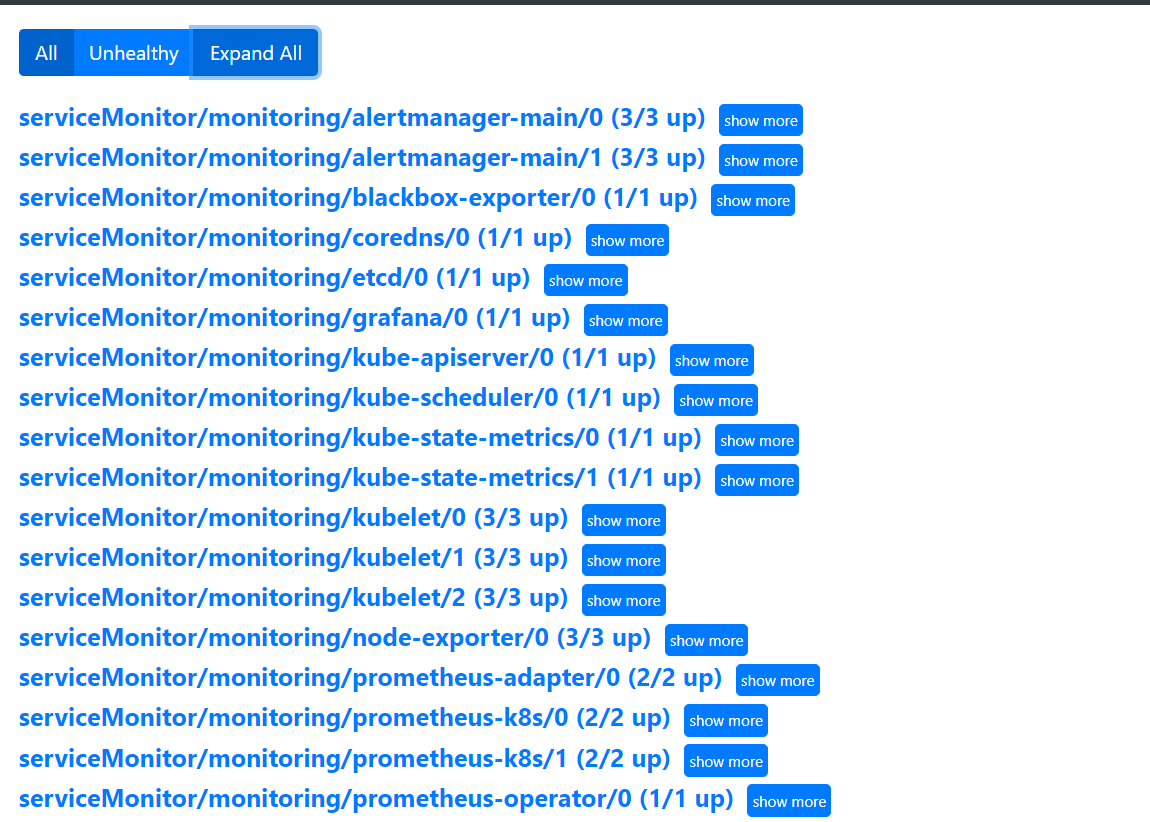

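Step 9: Monitor the Kubernetes components

Because this Kubernetes cluster is deployed from binaries, its components cannot be monitored out of the box, so custom ServiceMonitor configuration is added to monitor them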

#List the bundled ServiceMonitors

kubectl get servicemonitor -n monitoring

#Output

NAME                      AGE
alertmanager-main         14m
blackbox-exporter         14m
coredns                   14m
grafana                   14m
kube-apiserver            14m
kube-controller-manager   14m
kube-scheduler            14m
kube-state-metrics        14m
kubelet                   14m
node-exporter             14m
prometheus-adapter        14m
prometheus-k8s            14m
prometheus-operator       14m

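#Most Kubernetes components already have a ServiceMonitor (etcd does not). With a binary deployment they cannot be discovered automatically, so a Service and Endpoints pointing at each component's port are needed

#First, check the label each ServiceMonitor selects on (matchLabels)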

for i in `kubectl get servicemonitor -n monitoring |grep kube |grep -v "kube-apiserver\|kube-state-metrics" | awk '{print $1}'` ; do kubectl get servicemonitor $i -o yaml -n monitoring | sed -n '/matchLabels/ {h ; n ; p }' ; done

#Output

app.kubernetes.io/name: kube-controller-manager

app.kubernetes.io/name: kube-scheduler

app.kubernetes.io/name: kubelet
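The sed in the loop above prints only the first line after `matchLabels`, so a selector with several labels would be cut short. Below is a sketch of an awk-based alternative that prints every label nested under `matchLabels`. `match_labels` is a hypothetical helper name. It assumes block-style YAML as printed by `kubectl get -o yaml`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read YAML on stdin and print every "key: value" line
# nested under a matchLabels: key (block-style YAML, as kubectl prints it).
match_labels() {
  awk '
    /matchLabels:/ {                   # remember the indentation of the key
      match($0, /^ */); base = RLENGTH; inblock = 1; next
    }
    inblock {
      match($0, /^ */)
      if (RLENGTH > base && $0 ~ /:/) { sub(/^ +/, ""); print; next }
      inblock = 0                      # a dedent ends the matchLabels block
    }
  '
}

# Usage:
#   kubectl get servicemonitor kube-scheduler -n monitoring -o yaml | match_labels
```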

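#Create a directory for the ServiceMonitor-related YAML files

mkdir svcmonitor

#Configure the Service and Endpoints for each component that needs them (kube-scheduler, kubelet, kube-controller-manager). The components must listen on an address Prometheus can reach, for example --bind-address=0.0.0.0 rather than 127.0.0.1

#kube-scheduler.yaml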

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-scheduler #matches the label in the ServiceMonitor
  name: kube-scheduler
  namespace: kube-system
spec:
  ports:
  - name: https-metrics
    port: 10259
    protocol: TCP
    targetPort: 10259
  type: ClusterIP
status:
  loadBalancer: {}
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app.kubernetes.io/name: kube-scheduler #matches the label in the ServiceMonitor
  name: kube-scheduler
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.100.100
  ports:
  - name: https-metrics
    port: 10259
    protocol: TCP

#kubelet.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/managed-by: prometheus-operator
    app.kubernetes.io/name: kubelet
  name: kubelet
  namespace: kube-system
spec:
  ports:
  - name: https-metrics
    port: 10250
    protocol: TCP
    targetPort: 10250
  - name: http-metrics
    port: 10255
    protocol: TCP
    targetPort: 10255
  - name: cadvisor
    port: 4194
    protocol: TCP
    targetPort: 4194
  type: ClusterIP
status:
  loadBalancer: {}
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app.kubernetes.io/managed-by: prometheus-operator
    app.kubernetes.io/name: kubelet
  name: kubelet
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.100.100
    targetRef:
      kind: Node
      name: 192.168.100.100
  - ip: 192.168.100.101
    targetRef:
      kind: Node
      name: 192.168.100.101
  - ip: 192.168.100.102
    targetRef:
      kind: Node
      name: 192.168.100.102
  ports:
  - name: https-metrics
    port: 10250
    protocol: TCP
  - name: http-metrics
    port: 10255
    protocol: TCP
  - name: cadvisor
    port: 4194
    protocol: TCP

#kube-controller-manager.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-controller-manager
  name: kube-controller-manager
  namespace: kube-system
spec:
  ports:
  - name: https-metrics
    port: 10257
    protocol: TCP
    targetPort: 10257
  type: ClusterIP
status:
  loadBalancer: {}
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app.kubernetes.io/name: kube-controller-manager
  name: kube-controller-manager
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.100.100
  ports:
  - name: https-metrics
    port: 10257
    protocol: TCP
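The kube-scheduler and kube-controller-manager manifests differ only in name, port and node IPs, so you can generate them. This is a sketch that assumes a single `https-metrics` port and the kube-system namespace used above. `gen_static_svc` is a hypothetical helper. The kubelet manifest exposes three ports, so you still need to write it by hand.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a selector-less Service plus a matching Endpoints
# object for a control-plane component that runs outside the cluster.
# Usage: gen_static_svc <name> <port> <node-ip>...
gen_static_svc() {
  local name=$1 port=$2 ip
  shift 2
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: ${name}
  name: ${name}
  namespace: kube-system
spec:
  ports:
  - name: https-metrics
    port: ${port}
    protocol: TCP
    targetPort: ${port}
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app.kubernetes.io/name: ${name}
  name: ${name}
  namespace: kube-system
subsets:
- addresses:
EOF
  # one address entry per node the component runs on
  for ip in "$@"; do
    printf '  - ip: %s\n' "$ip"
  done
  cat <<EOF
  ports:
  - name: https-metrics
    port: ${port}
    protocol: TCP
EOF
}

# Usage:
#   gen_static_svc kube-controller-manager 10257 192.168.100.100 > svcmonitor/kube-controller-manager.yaml
```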

#etcd.yaml (etcd has no bundled ServiceMonitor, so one is created manually)

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: etcd
  name: etcd
  namespace: monitoring
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 30s
    port: https-metrics
    scheme: https
    tlsConfig:
      caFile: /etc/prometheus/secrets/etcd-certs/ca.pem
      certFile: /etc/prometheus/secrets/etcd-certs/etcd.pem
      insecureSkipVerify: true
      keyFile: /etc/prometheus/secrets/etcd-certs/etcd-key.pem
      serverName: kubernetes
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: etcd
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: etcd
  name: etcd
  namespace: kube-system
spec:
  clusterIP: None
  ports:
  - name: https-metrics
    port: 2379
    protocol: TCP
    targetPort: 2379
  type: ClusterIP
status:
  loadBalancer: {}
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: etcd
  name: etcd
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.100.100
  ports:
  - name: https-metrics
    port: 2379
    protocol: TCP

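#Create a secret from the etcd certificates

kubectl create secret generic etcd-certs --from-file=/etc/kubernetes/ssl/ca.pem --from-file=/etc/kubernetes/ssl/etcd.pem --from-file=/etc/kubernetes/ssl/etcd-key.pem -n monitoring

#Mount the certificates into Prometheus. Secrets listed here appear under /etc/prometheus/secrets/<secret name>/, which matches the tlsConfig paths above

vim prometheus-prometheus.yaml

...
  secrets:
  - etcd-certs
...

#Apply the YAML files (the whole svcmonitor directory, so the scheduler, kubelet and controller-manager objects are created too)

kubectl apply -f svcmonitor/

kubectl apply -f prometheus-prometheus.yaml

#Check the result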

Step 10: Add email, WeCom (WeChat Work) and DingTalk alerts

#Open the Alertmanager configuration manifest

vim manifests/alertmanager-secret.yaml

...
  alertmanager.yaml: |-
    "global":
      "resolve_timeout": "30m"
      "smtp_from": "xxxxxxxxxx@126.com"
      "smtp_hello": "xxxxxxxxxx@126.com"
      "smtp_smarthost": "smtp.126.com:25"
      "smtp_auth_username": "xxxxxxxxxx@126.com"
      "smtp_auth_password": "xxxxxxxxxxxxxxxx"
      "smtp_require_tls": false
    "inhibit_rules":
    - "equal":
      - "namespace"
      - "alertname"
      "source_matchers":
      - "severity = critical"
      "target_matchers":
      - "severity = warning"
    - "equal":
      - "namespace"
      - "alertname"
      "source_matchers":
      - "severity = warning"
      "target_matchers":
      - "severity = info"
    "receivers":
    - "name": "email"
      "email_configs":
      - "to": "xxxxxxxxxxxx.com"
        "send_resolved": true
    - "name": "Watchdog"
      "email_configs":
      - "to": "xxxxxxxxxxxx.com"
        "send_resolved": true
    # WeCom alerts: see my other article "Adding WeCom and DingTalk alerts to Alertmanager" for setup
    - "name": "wechat"
      "wechat_configs":
      - "to_party": "1"
        "agent_id": "1000001"
        "corp_id": "xxxxxxxxxxxxxxx"
        "to_user": "@all"
        "api_secret": "xxxxxxxxxxxxxxxx"
        "send_resolved": true
    # DingTalk alerts need a separate webhook service; see the same article for how to install it.
    # localhost only works if the webhook runs inside the Alertmanager pod; otherwise use its Service address.
    - "name": "dingtalk"
      "webhook_configs":
      - "url": 'http://localhost:8060/dingtalk/webhook1/send'
        "send_resolved": true
    "route":
      "group_by":
      - "alertname"
      "group_interval": "5m"
      "group_wait": "30s"
      "receiver": "email"
      "repeat_interval": "12h"
      "routes":
      - "matchers":
        - "alertname = Watchdog"
        "receiver": "Watchdog"
        "continue": true
      - "matchers":
        - "severity = warning"
        "receiver": "email"
...

#Apply the YAML file

kubectl apply -f manifests/alertmanager-secret.yaml

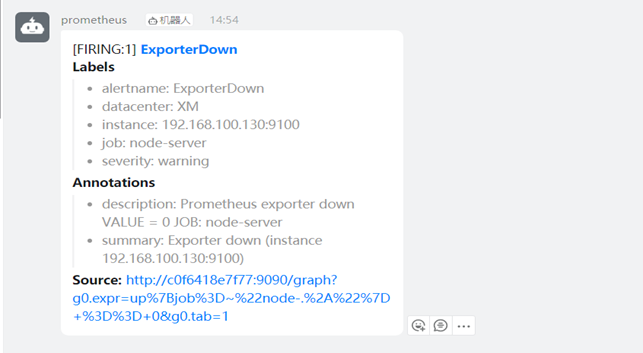

#Send a test alert and check the result

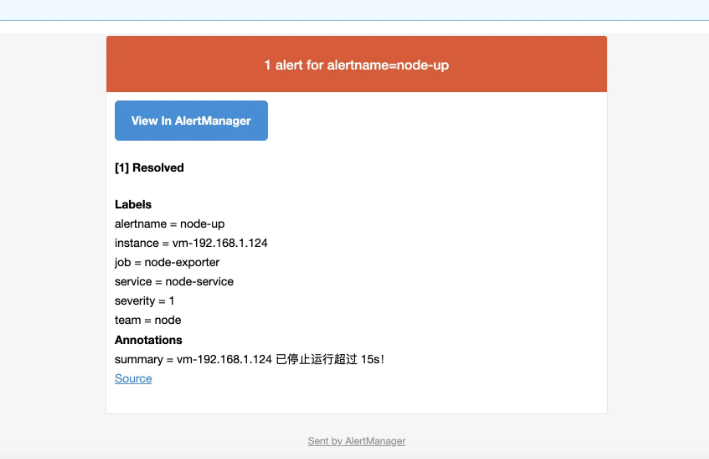

邮件

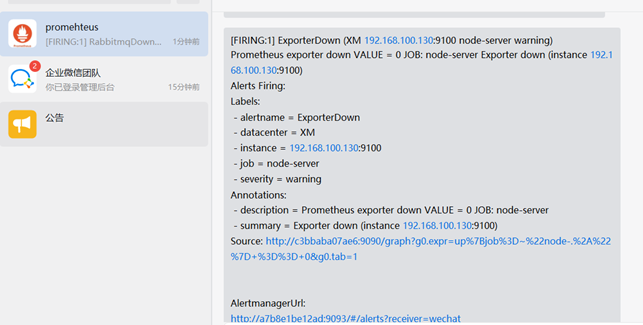

企业微信

钉钉